Hello Internet, in today's article we shall why in order to build long lasting solutions, we should keep aside the eternal arguments about what technology to use for building software and rather bring out a large whiteboard and first attempt to solve problems in a time tested way: brainstorming, diagrams and formulae.

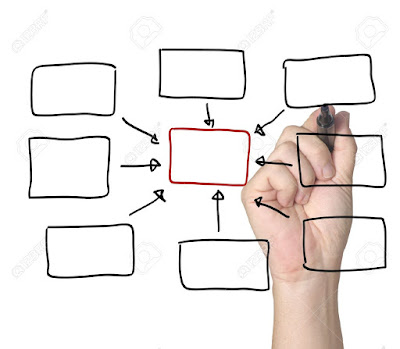

What makes solutions like Google search, YouTube, Uber, Facebook, Picasa, Flickr and Instagram so good at their game? It is very easy to conclude that technology is the ONE answer to such seamless solutions. However, technology is only the means to an end. The same technologies that are available to the top 10 apps / websites are also available to everyone else. In the great levelling playing field called the Internet, easily downloadable and commoditised technology is not what seperates the best from the good. These companies are in fact excelling at something that happens even before technology is applied, or even before the engineers finalise on what technology is best for the situation. It seems very contradictory, but these companies are good at sitting down together in front of a whiteboard, writing down points on a marker, drawing diagrams and paper models and playing out the entire solution with paper and pencil before they enter the technological world of bits, bytes, codes, apps, SaaS and the market.

But just what is it that they are drawing on whiteboards and modelling on paper before sitting down to write programs? And how does that make a difference to the final solution? As it turns out, they are figuring out something very fundamental to the world of computing. Regardless of whether you use Android, iOS, Java, Python or NodeJS, computing devices will understand only two building blocks. Enter data structures and algorithms.

Lasting companies and solutions: What seperates the best from the good

Let's play a small rapid fire game. Try to answer the following questions within seconds of reading.- You need to search for the recipe of a truffle cake. Which site will you head for?

- You are standing at the airport and need a taxi to take you home. Which app do you reach for?

- You have some awesome pictures from your visit to Thailand. Where do you upload your photos?

What makes solutions like Google search, YouTube, Uber, Facebook, Picasa, Flickr and Instagram so good at their game? It is very easy to conclude that technology is the ONE answer to such seamless solutions. However, technology is only the means to an end. The same technologies that are available to the top 10 apps / websites are also available to everyone else. In the great levelling playing field called the Internet, easily downloadable and commoditised technology is not what seperates the best from the good. These companies are in fact excelling at something that happens even before technology is applied, or even before the engineers finalise on what technology is best for the situation. It seems very contradictory, but these companies are good at sitting down together in front of a whiteboard, writing down points on a marker, drawing diagrams and paper models and playing out the entire solution with paper and pencil before they enter the technological world of bits, bytes, codes, apps, SaaS and the market.

|

| Image courtesy: 123rf.com |

But just what is it that they are drawing on whiteboards and modelling on paper before sitting down to write programs? And how does that make a difference to the final solution? As it turns out, they are figuring out something very fundamental to the world of computing. Regardless of whether you use Android, iOS, Java, Python or NodeJS, computing devices will understand only two building blocks. Enter data structures and algorithms.

Data structures and algorithms: What they are

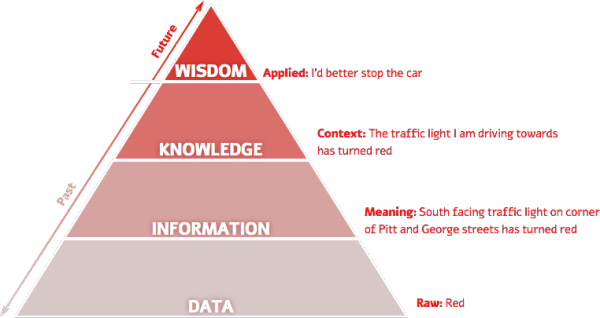

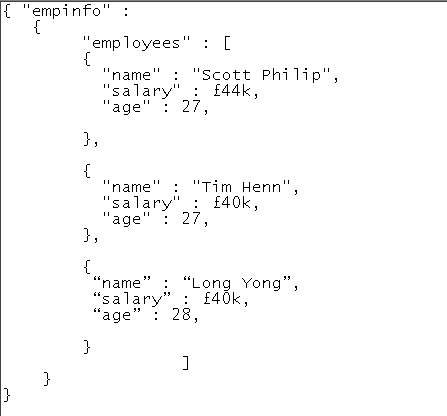

Computing devices are made to store data over a long period of time. Since data storage is such a core functionality in computing, scientists and engineers have turned extensively to mathematics to study the various forms in which data can be represented, such as sets, lists, matrices and the like. Different types of data need to be stored in different forms and there is an entire subject behind the study of how efficiently to store data such that it can be accessed fast when required later. The form in which computing devices store data is called data structure.

In order to store data and fetch it for use later, a computer must go through a set of instructions which when given a piece of data must find the right place inside to data structure to put it and then later use another set of instructions to fetch data quickly from the structure again. Just like data structures, a carefully planned sequence of instructions goes a long way in ensuring that there are no major bottlenecks while retrieving data and that the retrieval is as quick as possible. Such as sequence of instructions is called algorithm.

A well planned combination of data structures and algorithms can mean the difference between a smooth and a frustrating experience for the user. Planning a good combination often requires engineers to abandon a computer for a week or several weeks and simply draw out diagrams and write formulae on a whiteboard or a piece of paper before writing the first line of code.

Using data structures and algorithms: A real life example

To really understand how the choice of data structures and algorithms affects user experience, let us take a real life example.

- Let us say that Eddie has just moved into a new neighbourhood and is invited over to a get-together in his neighbourhood's club. Eddie takes along a sheet of paper and a pen, meets new people and notes down their names and numbers. He notes down names in the order in which he meets the persons and the names are not sorted in any order which makes sense to Eddie when he wants to look up specific names later. However a list works for him right now, since noting down is best done in the simplest way possible. This way, even if he meets three persons together and all of them rapidly dictate their names and numbers to him really fast, he can keep up, since he is simply appending new names to the list and his hand can instinctively reach the end of the list as soon as he hears a new name and number.

- However the list is not sorted in any particular order that makes sense, so Eddie goes home and fishes out his phone book. The phone book is structured such that it is easy to find names in a snap. The names are arranged in alphabetic order, with pages having markers for alphabets. To find a name, Eddie just goes to the page which is marked with the alphabet with which the name of the contact he is seeking starts. E.g. to find the number of a friend named Carlos, Eddie simply goes to the page marked with letter C, on which he can find all his contacts with names starting with C. Carlos will be right up there.

Image courtesy: amazon.com - Like any good affable person, Eddie wants to wish his friends on their birthdays and anniversaries. Let's say the day is 5th March. Alas, the phone book has no good way to pick out if any of his contacts has an event going for him / her that day. Even if he records each contact's birthday against his / her name, combing through each contact's record to find out if this day is special for him / her isn't a very attractive option. But Eddie is smart. He has a good calendar, where he marks the name of the person and the occasion related to the that person, against that date on the calendar. Eddie just looks up the date on the calendar and calls the person to make his / her day.

Image courtesy: weknowyourdreams.com - It is the soccer world cup final and Eddie wants to invite over all his soccer loving friends to his home to watch the match on his big screen smart TV at night. However, not all his friends are into sports and not everyone likes to stay up at night to watch TV. Again, going through his phone book is not the best option to gather a list of people who like sports and do not mind staying up late. Luckily, Eddie has been fairly inquisitive while talking to his friends and has smartly made categorised lists on who likes soccer and who are night owls. He just needs to look at his categorised lists to find friends who would fit the occasion. He can look up the names under the categories and then use the phone book to find the phone number and then call each friend.

Image courtesy: lifehack.org - Eddie decides to throw a barbecue party at his home's lawn. To make it personal, he decides to visit each house to invite his friends in person. He decides to bike around the neighbourhood and distribute hand drawn invitation cards to each family. But what route should he take to cover each family's home and do it in the shortest route possible? Will his phone book or calendar help here? Of course not. Perhaps a map of the neighbourhood will. And Eddie does have one. So he sets down to work, drawing a good route with a pencil over the map. Before long, the cards have been distributed and Eddie is set to have an awesome barbecue party.

Image courtesy: 123rf.com

The above illustration shows how having different representations of the same small neighbourhood and its people can drastically improve or destroy the experience of having to use that information. Imagine what it would be for Google or Uber to not use the right data structures and algorithms for the right purpose, with the humongous amount of data that they have.

Conclusion

Using the right data structures and algorithms seems to be common sense, but more too often, companies get caught up in the lure of technology and fail to pay enough attention to the basic building blocks and the core foundations of data structures and algorithms. Most companies in fact brag about having used cutting edge technologies such as their solutions being built on NoSQL, using web sockets or using cloud computing and we have very few companies that boast their algorithm can allow them to service 10000 users at once.

Since the inception of computing, data structures and algorithms have been unsung heroes and they still continue to be unsung, but that does not change the fact that they are heroes.